Unless you've been living under a rock, you have already heard of ChatGPT and probably of its various abilities, which range from writing code and fixing bugs to writing essays, video scripts, and recipes. So I decided to give it a try and find out how good it is. The following is my experience building a simple FastAPI app entirely with ChatGPT.

Introduction

FastAPI is a Python framework that helps us to build APIs super fast. For this experiment, I decided to build an API with 3 endpoints:

A "Hello World" message

Adding items to a collection

Viewing all items in the collection

Below, I quote what ChatGPT told me in response to my questions and attach screenshots of the conversation.

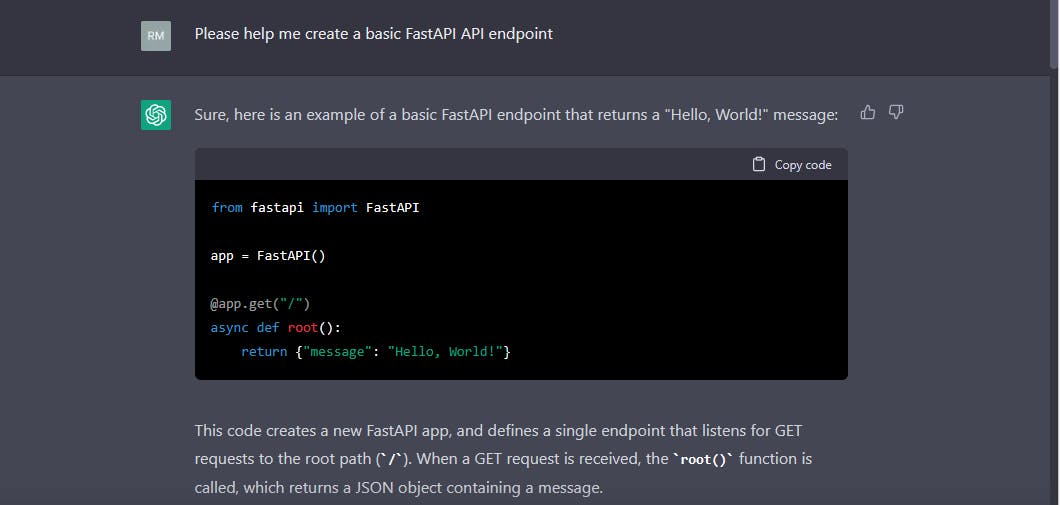

A simple "Hello World" endpoint

I started with a simple question to ChatGPT.

Me: Please help me create a basic FastAPI API endpoint

ChatGPT: Sure, here is an example of a basic FastAPI endpoint that returns a "Hello, World!" message:

    from fastapi import FastAPI

    app = FastAPI()

    @app.get("/")
    async def root():
        return {"message": "Hello, World!"}

It goes on to explain:

This code creates a new FastAPI app, and defines a single endpoint that listens for GET requests to the root path (/). When a GET request is received, the root() function is called, which returns a JSON object containing a message.

You can run the app using the command uvicorn main:app --reload (assuming the file is named main.py) and test it by visiting http://localhost:8000/ in your web browser.
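If you would rather check the endpoint from a script than from the browser, a small standard-library client is enough. This is only a sketch: it assumes the app from the snippet above is already running at http://localhost:8000/, and fetch_json is a helper name I made up for illustration.

```python
import json
from urllib.request import urlopen


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """Send a GET request to `url` and decode the JSON response body."""
    with urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage, assuming `uvicorn main:app --reload` is running locally:
#   fetch_json("http://localhost:8000/")
```

With the server up, the call in the comment should return the same message dictionary that root() returns.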

As you can see, ChatGPT knows how to create a simple API endpoint using FastAPI. What is super amazing is that it tells me what the code does and how to run it.

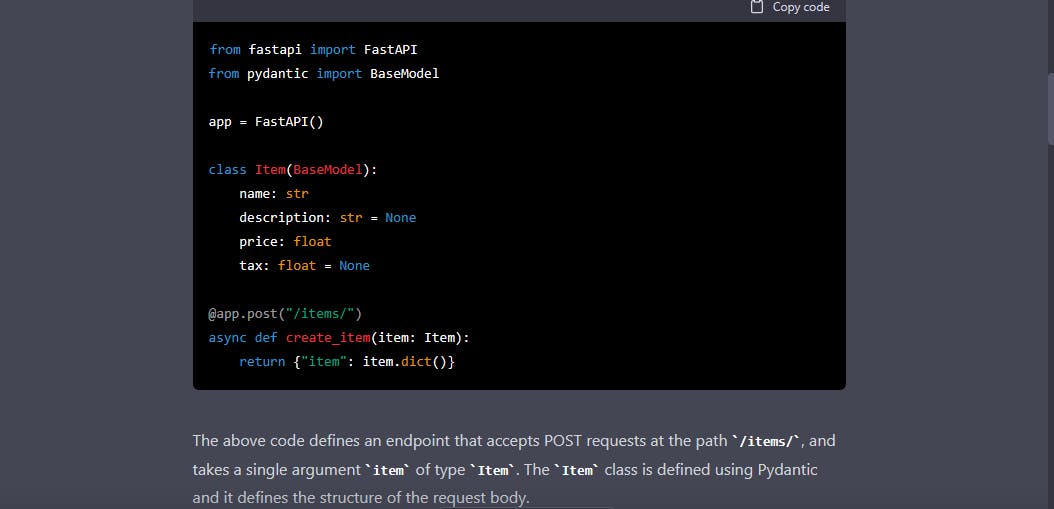

A POST endpoint

Along with the "Hello World" endpoint, ChatGPT gave me a POST endpoint and used pydantic to generate the request schema.

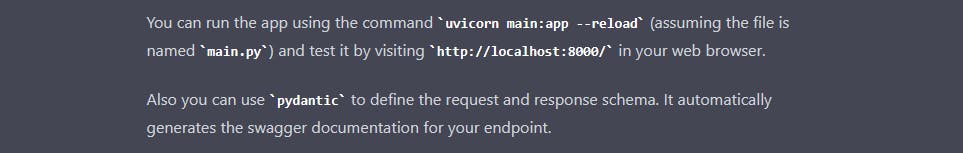

ChatGPT: Also you can use pydantic to define the request and response schema. It automatically generates the swagger documentation for your endpoint.

    from fastapi import FastAPI
    from pydantic import BaseModel

    app = FastAPI()

    class Item(BaseModel):
        name: str
        description: str = None
        price: float
        tax: float = None

    @app.post("/items/")
    async def create_item(item: Item):
        return {"item": item.dict()}

ChatGPT: The above code defines an endpoint that accepts POST requests at the path /items/, and takes a single argument item of type Item. The Item class is defined using Pydantic and it defines the structure of the request body.
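To make the schema more concrete, here is a sketch of a request body that matches the Item model, wrapped in a POST request built with the standard library. In the model, name and price are required, while description and tax have defaults and can be left out. The helper name build_create_item_request and the sample values are my own, and the URL assumes the app is served locally on port 8000.

```python
import json
from urllib.request import Request


def build_create_item_request(base_url: str, item: dict) -> Request:
    """Build (but do not send) a JSON POST request for the /items/ endpoint."""
    body = json.dumps(item).encode("utf-8")
    return Request(
        url=base_url.rstrip("/") + "/items/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical sample payload: the two required fields plus one optional field.
sample_item = {"name": "Notebook", "price": 4.5, "description": "A5, ruled"}
request = build_create_item_request("http://localhost:8000", sample_item)

# To actually send it while the server is running:
#   from urllib.request import urlopen
#   urlopen(request).read()
```

Building the request separately from sending it makes it easy to inspect the method, URL, and body before anything goes over the wire.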

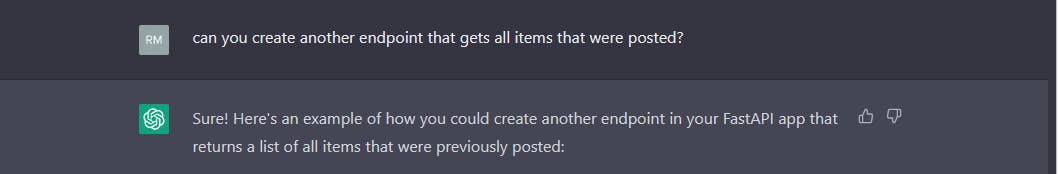

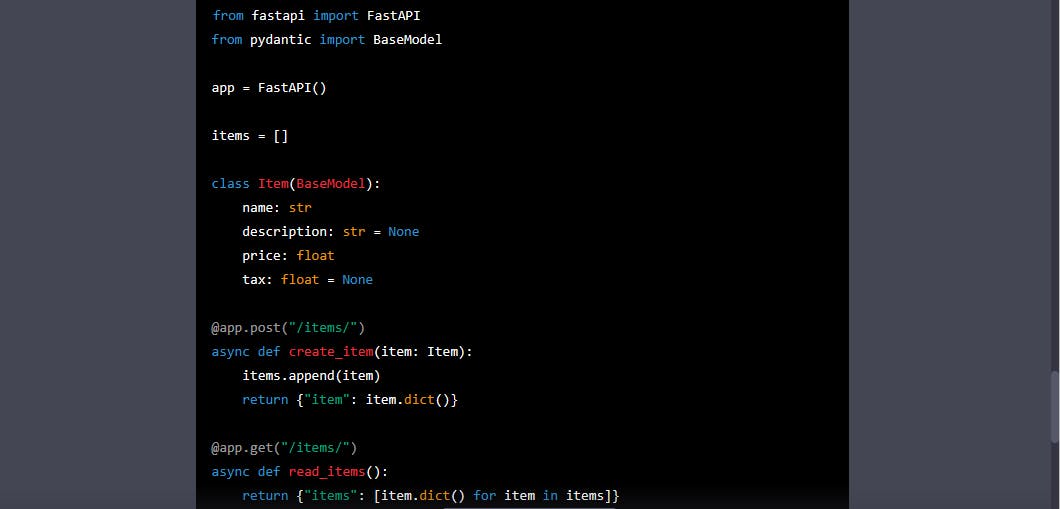

Asking for another endpoint

I then asked ChatGPT to generate another endpoint, building on its previous answer:

Me: can you create another endpoint that gets all items that were posted?

ChatGPT: Sure! Here's an example of how you could create another endpoint in your FastAPI app that returns a list of all items that were previously posted:

And it was perfectly done, with explanations and everything.

    from fastapi import FastAPI
    from pydantic import BaseModel

    app = FastAPI()

    items = []

    class Item(BaseModel):
        name: str
        description: str = None
        price: float
        tax: float = None

    @app.post("/items/")
    async def create_item(item: Item):
        items.append(item)
        return {"item": item.dict()}

    @app.get("/items/")
    async def read_items():
        return {"items": [item.dict() for item in items]}

The above code defines a new endpoint that listens for GET requests to the path /items/. When a GET request is received, the read_items() function is called, which returns a JSON object containing a list of all items that were previously posted using the create_item endpoint.

The items are stored in a simple list called items which is defined at the top of the file. Every time the create_item endpoint is called, the item is appended to the list. The read_items endpoint just returns the list of items.

Please note that in the above example, the data is stored in memory and will be lost once the server is shut down. In a real-world scenario, you would want to store the data in a more persistent storage such as a database.

Also, please note that this is just an example and this is not a good practice to store data like this.
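ChatGPT's point about persistence can be sketched with Python's built-in sqlite3 module. This is a minimal illustration and not what the generated app does: the table layout and the function names init_db, add_item, and list_items are assumptions of mine, and a real app would call them from the two endpoint functions instead of using the items list.

```python
import sqlite3
from typing import Optional

COLUMNS = ("name", "description", "price", "tax")


def init_db(path: str = "items.db") -> sqlite3.Connection:
    """Open (or create) the database and make sure the items table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS items (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            name TEXT NOT NULL,
            description TEXT,
            price REAL NOT NULL,
            tax REAL
        )
        """
    )
    conn.commit()
    return conn


def add_item(
    conn: sqlite3.Connection,
    name: str,
    price: float,
    description: Optional[str] = None,
    tax: Optional[float] = None,
) -> int:
    """Insert one item and return its row id."""
    cursor = conn.execute(
        "INSERT INTO items (name, description, price, tax) VALUES (?, ?, ?, ?)",
        (name, description, price, tax),
    )
    conn.commit()
    return cursor.lastrowid


def list_items(conn: sqlite3.Connection) -> list:
    """Return all stored items as dictionaries, oldest first."""
    rows = conn.execute(
        "SELECT name, description, price, tax FROM items ORDER BY id"
    ).fetchall()
    return [dict(zip(COLUMNS, row)) for row in rows]
```

Because the data lives in a file (items.db by default), it survives a server restart, which the in-memory list does not.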

Let us do something more

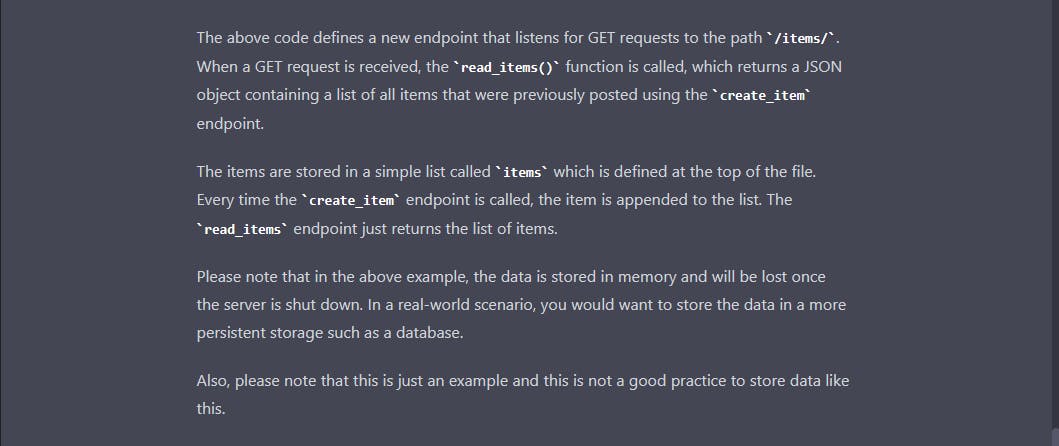

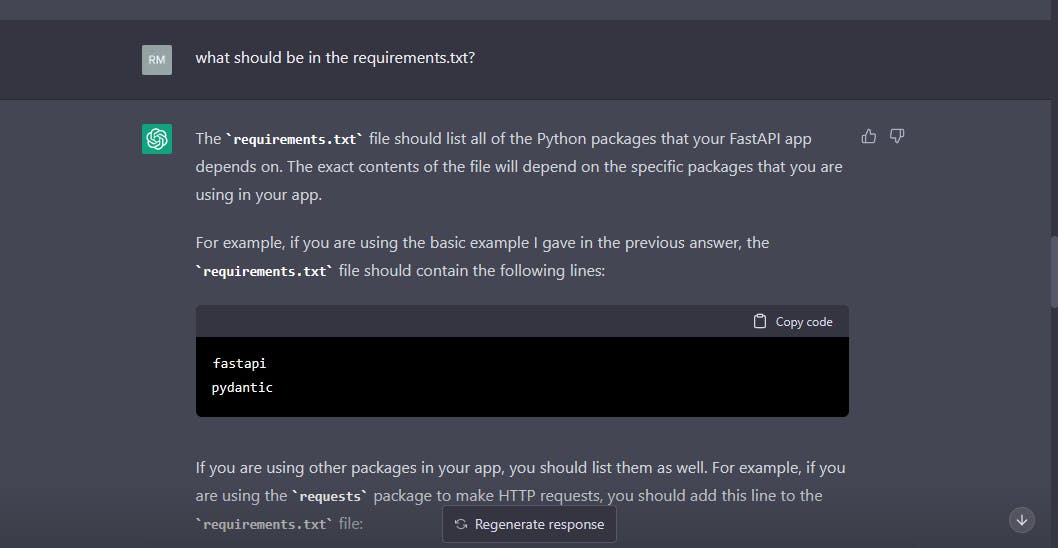

I went on to ask ChatGPT about the requirements.txt file, which is important for dependency management in Python.

Me: what should be in the requirements.txt?

ChatGPT: The requirements.txt file should list all of the Python packages that your FastAPI app depends on. The exact contents of the file will depend on the specific packages that you are using in your app.

For example, if you are using the basic example I gave in the previous answer, the requirements.txt file should contain the following lines:

    fastapi
    pydantic

If you are using other packages in your app, you should list them as well. For example, if you are using the requests package to make HTTP requests, you should add this line to the requirements.txt file:

    requests

You can also use a tool like pip freeze to generate the requirements.txt file. This command lists all of the packages and versions currently installed in your environment, and can be useful when you are not sure which packages you need to include.

For example, you can run pip freeze > requirements.txt in your command line, this will generate the requirements.txt file with all the packages that are installed in your virtual environment.

Please make sure that you are running these commands inside the virtual environment which you are going to use to run your application.
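To get a feel for what pip freeze reports, the sketch below builds a similar name==version listing with the standard library's importlib.metadata. It is a rough approximation under my own assumptions (pip freeze also handles cases such as editable installs that this ignores), and freeze_lines is a name I chose for illustration.

```python
from importlib.metadata import distributions


def freeze_lines() -> list:
    """Return sorted 'name==version' lines for packages in this environment."""
    lines = set()
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs that have no recorded name
            lines.add(f"{name}=={dist.version}")
    return sorted(lines, key=str.lower)


# Usage: print the listing, much like `pip freeze` would.
#   print("\n".join(freeze_lines()))
```

Run it with the interpreter of the virtual environment you care about, since it only sees packages installed for that interpreter.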

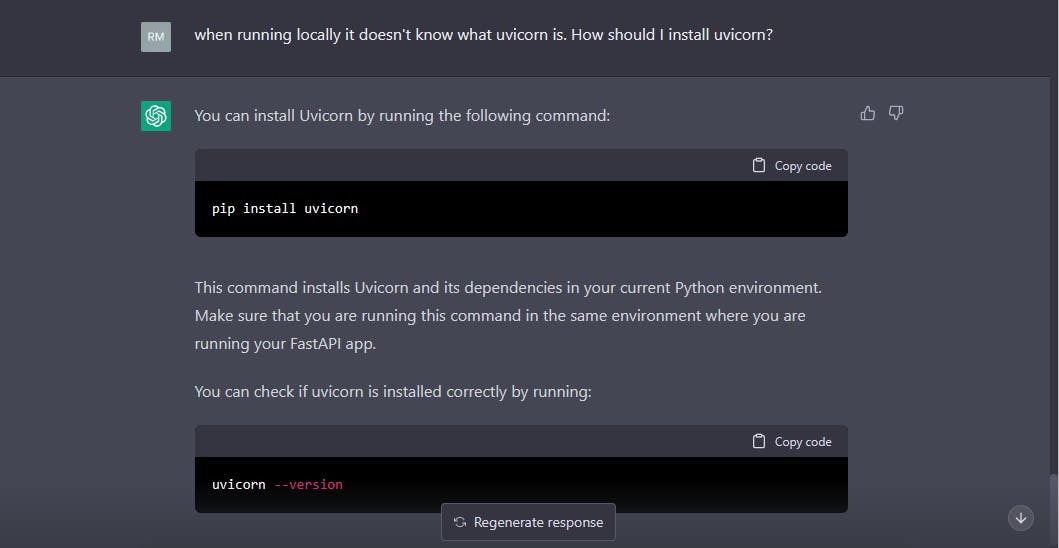

Solving errors

One more awesome thing about ChatGPT is that it can also help if we encounter an error. For example, it asked us to run the app with uvicorn main:app --reload. If we do not have uvicorn installed, we will get an error here. We can ask ChatGPT to help us with this.

Me: when running locally it doesn't know what uvicorn is. How should I install uvicorn?

ChatGPT: You can install Uvicorn by running the following command:

pip install uvicorn

This command installs Uvicorn and its dependencies in your current Python environment. Make sure that you are running this command in the same environment where you are running your FastAPI app.

You can check if uvicorn is installed correctly by running:

uvicorn --version

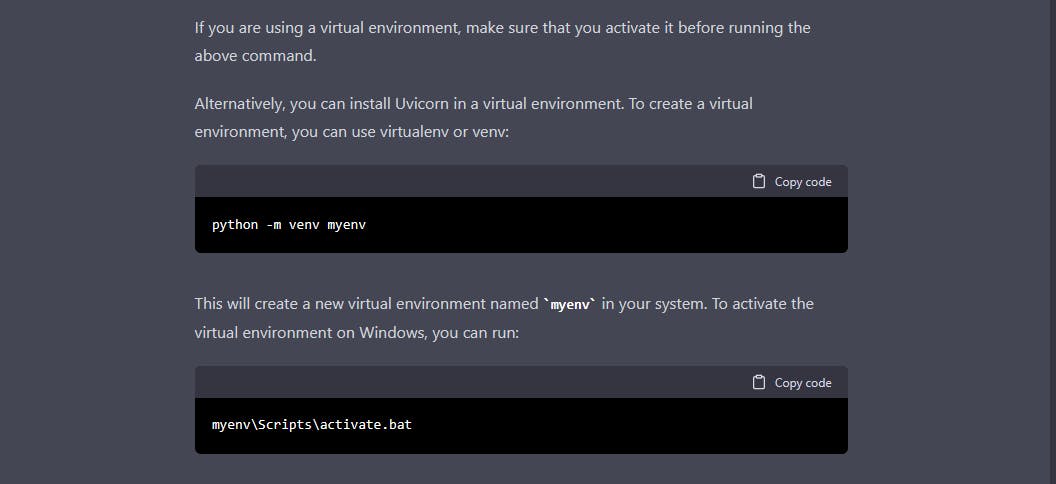

If you are using a virtual environment, make sure that you activate it before running the above command.

Alternatively, you can install Uvicorn in a virtual environment. To create a virtual environment, you can use virtualenv or venv:

python -m venv myenv

This will create a new virtual environment named myenv in your system. To activate the virtual environment on Windows, you can run:

myenv\Scripts\activate.bat

Or on Linux/macOS:

source myenv/bin/activate

Once your virtual environment is activated, you can install uvicorn as usual.
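If you are not sure whether the environment is active or whether uvicorn actually landed in it, a quick check from Python can help. This is a sketch under my own assumptions: in_virtualenv, is_installed, and describe_environment are hypothetical helper names, and the virtual environment check relies on sys.prefix differing from sys.base_prefix, which holds for environments created with python -m venv.

```python
import importlib.util
import sys


def in_virtualenv() -> bool:
    """True when the interpreter runs inside a venv-style environment."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def is_installed(module_name: str) -> bool:
    """True when `module_name` can be imported by this interpreter."""
    return importlib.util.find_spec(module_name) is not None


def describe_environment(module_name: str = "uvicorn") -> str:
    """Summarize whether a venv is active and whether the module is available."""
    venv_state = "active" if in_virtualenv() else "not active"
    module_state = "installed" if is_installed(module_name) else "missing"
    return f"virtual environment: {venv_state}; {module_name}: {module_state}"


# Usage:
#   print(describe_environment())
```

If the module shows up as missing, running pip install uvicorn in the same environment, as ChatGPT suggested, should fix it.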

It gives us all the instructions along with relevant guidelines. This makes it super powerful and useful.

Final thoughts

I think ChatGPT is an awesome tool that can give developers a huge advantage. It can take over mundane, repetitive tasks and let us focus on building new features and collaborating. My experiment was very simple, and ChatGPT may fail at more complex tasks. But using it effectively and responsibly is in our hands.

You can find the code in the GitHub repo here. I had to change the code a little to get everything working together but most of it is exactly as written by AI. Thank you for reading this blog post. Until next time, goodbye and happy learning.